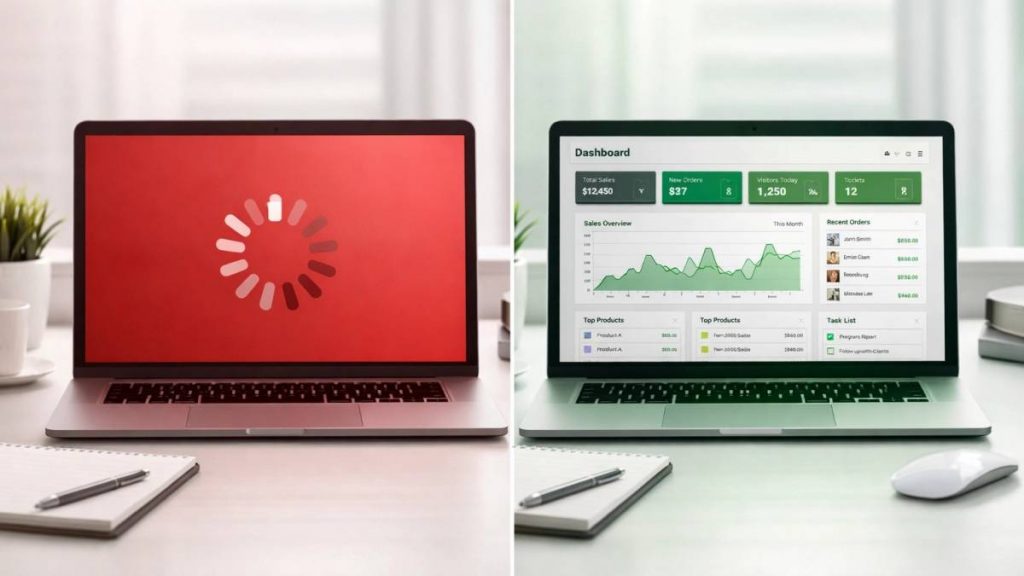

You know that little spinning wheel that shows up when you’re trying to load a page? The one that makes you want to throw your laptop out the window? Oftentimes, that’s not a bandwidth problem; it’s a latency problem.

And if your business runs apps, databases, or any online service, understanding the difference between these two could be the secret to keeping your customers happy (and sticking around).

The Big Mix-Up: Bandwidth vs. Latency

Most people think “faster internet” means more bandwidth. Like, if you upgrade from 100 Mbps to 1 Gbps, everything magically speeds up, right?

Not quite.

Bandwidth is the size of the pipe: how much data you can shove through at once. Think of it like a highway with more lanes. Great for downloading massive files or streaming 4K video.

Latency is the delay between when data leaves your computer and when the response comes back. It’s measured in milliseconds (ms). Think of it as the length of the drive: no matter how many lanes the highway has, you still have to cover the distance.

For most business applications, latency is the real killer across your CRM, website, and internal tools. You can have a 10-lane superhighway, but if the server is 1,000 miles away, every click, every database query, and every page load must wait for that round trip.

And here’s the kicker: you can’t cheat physics.

The Speed of Light Isn’t Negotiable

Data travels through fiber optic cables at roughly two-thirds the speed of light. That’s about 124 miles per millisecond. Sounds fast, right?

But when your server is in Oregon, and your team is in Sacramento, you’re looking at a 600-mile round trip. That’s about 5 milliseconds just in travel time, and that’s before you factor in routing, switches, and processing delays.

Now imagine your server is in a massive cloud region in Virginia. That’s 2,500 miles one way. Suddenly, you’re looking at 20+ milliseconds each way, or about 40 milliseconds round trip, just to say hello. Every. Single. Time.
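If you want to check the math yourself, here is a minimal sketch. It uses the roughly 124 miles per millisecond figure from above and counts travel time only; the function name is just for illustration, and real fiber routes are longer than straight-line distances, so treat the results as a floor.

```python
# Rough propagation-delay estimate using the ~124 miles/ms figure from the text.
# Assumption: travel time only. Routing, switching, and processing are ignored,
# and real cable paths are longer than the distances used here.
FIBER_MILES_PER_MS = 124.0


def propagation_ms(miles: float) -> float:
    """Milliseconds for a signal to cover `miles` of fiber."""
    return miles / FIBER_MILES_PER_MS


oregon_round_trip = propagation_ms(600)          # 600-mile round trip
virginia_one_way = propagation_ms(2500)          # 2,500 miles one way
virginia_round_trip = propagation_ms(2 * 2500)   # there and back

print(f"Oregon round trip:   {oregon_round_trip:.1f} ms")
print(f"Virginia one way:    {virginia_one_way:.1f} ms")
print(f"Virginia round trip: {virginia_round_trip:.1f} ms")
```

That works out to about 4.8 ms for the Oregon round trip, about 20 ms one way to Virginia, and about 40 ms for the Virginia round trip.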

For a simple web page that makes 50 requests to the server (images, scripts, database calls), those milliseconds add up fast. What could be a snappy, instant experience becomes a sluggish crawl.
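To see how quickly that adds up, here is a back-of-the-envelope sketch. The round-trip numbers are illustrative: 2 ms for a local server, 5 ms for Oregon, and roughly 40 ms for Virginia (twice the one-way figure). The limit of six requests in flight at once is an assumption for illustration, not a measurement of any particular browser or app.

```python
import math


def page_wait_ms(requests: int, rtt_ms: float, parallel: int = 1) -> float:
    """Latency-only wait for a page load.

    Requests go out in waves of `parallel`; each wave costs one round trip.
    Server processing and download time are ignored.
    """
    waves = math.ceil(requests / parallel)
    return waves * rtt_ms


# Illustrative round-trip times in milliseconds (assumptions, not measurements).
locations = [("Local", 2), ("Oregon", 5), ("Virginia", 40)]

for label, rtt in locations:
    one_at_a_time = page_wait_ms(50, rtt)
    six_at_a_time = page_wait_ms(50, rtt, parallel=6)
    print(f"{label:9s} serial: {one_at_a_time:5.0f} ms   6 at a time: {six_at_a_time:4.0f} ms")
```

Even with six requests in flight at once, the far-away server spends hundreds of milliseconds just waiting on round trips, while the local one stays in the low double digits.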

What a Few Milliseconds Actually Cost

Let’s get real about the impact. In a widely cited figure, Amazon found that every 100 milliseconds of added latency cost them 1% in sales. Google reportedly found that adding just half a second (500 ms) to search results dropped traffic and revenue by 20%.

If you’re running an e-commerce site, a SaaS product, or even an internal business app, those numbers should make you sit up straight.

Your customers don’t consciously think, “Hmm, this page is 200 milliseconds slower than usual.” They just think, “This site feels slow,” and they bounce. Or worse, they switch to your competitor.

For internal apps, slow performance means your team wastes minutes every day waiting for screens to load, reports to generate, or forms to submit. Multiply that across 20 employees, and you’re losing hours of productivity every single week.
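Here is that multiplication as a quick sketch. The five minutes per person per day is a made-up figure for illustration; plug in whatever your own team actually experiences.

```python
def weekly_hours_lost(employees: int, minutes_per_day: float, workdays: int = 5) -> float:
    """Total hours per week the whole team spends waiting on slow screens."""
    return employees * minutes_per_day * workdays / 60


# Hypothetical input: 20 employees each losing 5 minutes a day.
hours = weekly_hours_lost(employees=20, minutes_per_day=5)
print(f"{hours:.1f} hours lost per week")
```

With those assumed numbers, the team loses a little over eight hours a week, or about one full workday.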

The “Mega-Cloud” Trap

Here’s where businesses get stuck: they assume that the big cloud providers (AWS, Azure, Google Cloud) are always the best option because they’re “enterprise-grade.”

And sure, those platforms are rock-solid. But here’s what they don’t tell you in the marketing brochure: their nearest region might be hundreds of miles away.

On AWS, West Coast workloads often end up in US West (Oregon). Azure and Google Cloud? Depending on the region you pick, it can be the same deal. If you’re hosting in one of these “mega-cloud” regions, your data is taking a road trip every time someone clicks a button.

Now, if your users are spread across the country or the world, those large regional clouds make sense. You can distribute your app across multiple regions and use CDNs to cache content.

But if your business is local or regional? If most of your users are in Northern California? You’re paying for infrastructure you don’t need while sacrificing the one thing that actually matters: low latency.

The Sacramento Advantage

When your servers are physically located in Sacramento, right where your business and users are, you cut out all that cross-state travel time. Instead of 20+ milliseconds, you’re looking at 1-3 milliseconds. Sometimes less.

That’s the difference between an app that feels instant and one that feels like it’s “thinking” every time you click.

And here’s the thing: you still get all the benefits of a professional data center (redundant power, enterprise-grade cooling, 24/7 security, multiple carrier options) without the latency penalty of hosting hundreds or thousands of miles away.

For businesses serving Sacramento, the Bay Area, or Northern California, it’s a no-brainer. Your users get a faster experience. Your team works more efficiently. And you’re not wasting money on bandwidth trying to compensate for latency.

When “Close” Beats “Fast”

Let’s break this down with a concrete example:

Scenario A: You host your application in AWS Oregon. You’ve got a fat 10 Gbps connection. Bandwidth is not an issue. But every database query, every API call, and every page load must travel 600 miles round-trip. Your average response time? 50-100 milliseconds per request.

Scenario B: You colocate your servers at Datacate in Sacramento. Your connection is “only” 1 Gbps. But your servers are 5 miles from your office and most of your users. Average response time? 5-10 milliseconds.

Which one feels faster to your users? Scenario B, every single time.

The “close” server with less bandwidth wins because latency dominates the user experience for most business applications. You can’t download your way out of a distance problem.
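A rough model makes the point. The sketch below adds up two things: time spent waiting on requests and time spent moving the data. The 50 requests and the 2 MB page size are assumptions for illustration, the response times are the low end of each scenario’s range, and requests are treated as happening one after another, which is a worst case.

```python
def load_time_ms(requests: int, response_ms: float,
                 payload_mb: float, bandwidth_gbps: float) -> float:
    """Simplified page load time: waiting on requests plus transferring the payload."""
    waiting = requests * response_ms                    # latency-bound part
    megabits = payload_mb * 8                           # megabytes -> megabits
    transfer = megabits / (bandwidth_gbps * 1000) * 1000  # seconds -> milliseconds
    return waiting + transfer


# Assumed workload: 50 requests and a 2 MB page (hypothetical numbers).
scenario_a = load_time_ms(requests=50, response_ms=50, payload_mb=2, bandwidth_gbps=10)
scenario_b = load_time_ms(requests=50, response_ms=5, payload_mb=2, bandwidth_gbps=1)

print(f"Scenario A (far away, 10 Gbps): {scenario_a:.1f} ms")
print(f"Scenario B (close by, 1 Gbps):  {scenario_b:.1f} ms")
```

In this model the extra bandwidth saves Scenario A about 14 ms of transfer time, while the extra distance costs it more than two seconds of waiting.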

How to Think About Latency for Your Business

Here’s a simple test: if your application makes many back-and-forth requests (like most web apps, dashboards, or database-driven tools), then latency is your enemy.

Ask yourself:

- Where are most of my users located?

- How many round-trip requests does my app make per page load?

- Am I paying for cloud resources I don’t actually need?

If your answer to the first question is “Sacramento” or “Northern California,” and your servers are currently in Oregon, Virginia, or somewhere else far away, you’re leaving performance (and money) on the table.
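Not sure how far away your servers really are? One rough way to find out is to time how long it takes to open a TCP connection, which takes about one network round trip. The sketch below uses only Python’s standard library; the function name and the hostname in the usage comment are placeholders, so substitute your own server.

```python
import socket
import statistics
import time


def tcp_connect_ms(host: str, port: int = 443, samples: int = 5,
                   timeout: float = 3.0) -> float:
    """Median time in milliseconds to open a TCP connection to host:port.

    Opening a connection takes roughly one round trip, so this is a
    rough stand-in for network latency, not a precise measurement.
    """
    # Resolve the name once up front so DNS lookup time is not counted.
    address = socket.gethostbyname(host)
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        with socket.create_connection((address, port), timeout=timeout):
            elapsed = time.perf_counter() - start
        timings.append(elapsed * 1000)
    return statistics.median(timings)


# Usage (hostname is a placeholder; use your own server):
# print(f"{tcp_connect_ms('your-server.example.com'):.1f} ms")
```

Run it from where your users actually sit, not from inside the data center, and compare the result against the distances above.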

The Bottom Line

Bandwidth is sexy. Everyone loves talking about gigabit connections and fiber speeds. But for most businesses, latency is what actually matters.

Hosting your data close to your users isn’t just a “nice-to-have.” It’s a competitive edge. It’s the difference between an app that feels instant and one that feels sluggish. It’s the difference between customers who stick around and customers who bounce.

The 1-millisecond advantage is real. And in a world where every click counts, “close” really is the new “fast.”

Want to reduce latency and deliver a faster experience for your users? Learn more about our Sacramento data center and how local colocation can give you the performance edge your business needs.