You’ve done it. After months of planning, vendor meetings, and sleepless nights, your IT infrastructure is finally humming along in its shiny new data center facility. The servers are racked, the cables are connected, and everything looks perfect on paper.

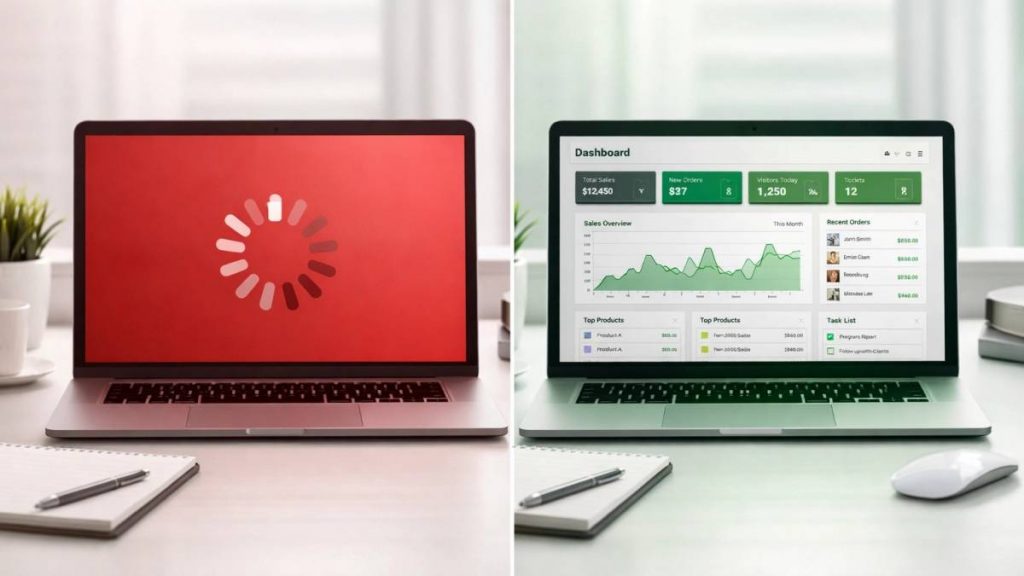

Then reality hits.

It could be cooling costs that run double what you budgeted, or network latency issues that never showed up in testing. Perhaps it’s a compliance audit that reveals gaps you never saw coming, or SLA disputes that suddenly make your “great deal” feel like anything but.

The truth is, most businesses experience some form of post-migration surprise. The good news? Most of these pitfalls are predictable and preventable if you know what to look for.

The Infrastructure Reality Check

Power and Cooling Mismatches

One of the most common post-migration shocks comes from power and cooling requirements that don’t align with real-world usage. Your old facility might have masked inefficiencies, or your new provider’s calculations might have been based on ideal conditions rather than your actual workload patterns.

The reality: Your servers might run hotter in the new rack configuration, or your power draw might spike during peak usage in ways that weren’t apparent in testing. Suddenly, you’re looking at higher utility bills or, worse, thermal throttling that impacts performance.

Prevention strategy: Run a full load test for at least 72 hours before considering the migration complete. Monitor power consumption and temperatures under actual working conditions, not just idle states. Document baseline performance metrics from your old facility and compare them directly with those of the new facility.
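The side-by-side comparison in that last step can be as simple as a script that flags any metric whose peak value moved more than a chosen tolerance between facilities. This is a minimal sketch, assuming you have exported peak readings from both load tests as name/value pairs. The metric names, readings, and 10% tolerance are hypothetical placeholders.

```python
import math

def compare_baselines(old, new, tolerance_pct=10.0):
    """Return {metric: percent change} for metrics that moved more than
    tolerance_pct between the old-facility baseline and the new facility."""
    flagged = {}
    for metric, old_value in old.items():
        if metric not in new:
            continue  # not captured in the new facility's test run
        new_value = new[metric]
        if old_value == 0:
            # Percentage change from zero is undefined; flag any nonzero reading.
            if new_value != 0:
                flagged[metric] = math.inf
            continue
        change = (new_value - old_value) / old_value * 100.0
        if abs(change) > tolerance_pct:
            flagged[metric] = round(change, 1)
    return flagged

# Hypothetical peak readings from a 72-hour load test in each facility.
old_facility = {"rack_power_kw_peak": 6.0, "inlet_temp_c_peak": 24.0, "cpu_throttle_events": 0}
new_facility = {"rack_power_kw_peak": 7.2, "inlet_temp_c_peak": 25.0, "cpu_throttle_events": 3}

flagged = compare_baselines(old_facility, new_facility)
print(flagged)
```

Here the 20% jump in peak rack power and the new throttling events are flagged, while the one-degree temperature rise stays inside the tolerance.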

Network Configuration Gotchas

Network issues can surface weeks or months after migration, when specific use cases or traffic patterns trigger problems that weren’t caught during initial testing. These might include routing inefficiencies, firewall rule conflicts, or bandwidth limitations that only appear under certain conditions.

The reality: That video conference that worked fine in testing might stutter during company-wide meetings. Database replication that seemed smooth might create bottlenecks during month-end processing. Remote access that functioned perfectly might struggle when everyone’s working from home during a snow day.

Prevention strategy: Create realistic stress tests that mirror your actual usage patterns, including peak loads and edge cases. Test all critical applications under various scenarios, and maintain detailed network documentation that can be quickly referenced when issues arise.
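When judging stress-test results, averages can hide the occasional stalls that users actually notice, so it helps to look at high percentiles. The sketch below uses the nearest-rank percentile method and checks a p99 latency budget. The samples and the 150 ms budget are invented for illustration; in practice the samples would come from whatever load-generation tool you already use.

```python
import math
import statistics

def percentile(samples, p):
    """Nearest-rank percentile: the smallest sample such that at least
    p percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]

def check_latency(samples_ms, p99_budget_ms):
    """Summarize a latency run and report whether its p99 fits the budget."""
    p99 = percentile(samples_ms, 99)
    return {
        "mean": statistics.mean(samples_ms),
        "p50": percentile(samples_ms, 50),
        "p99": p99,
        "within_budget": p99 <= p99_budget_ms,
    }

# Hypothetical round-trip times (ms) from a quiet hour and from a simulated
# company-wide meeting; 150 ms is an illustrative p99 budget.
quiet_hour = [20] * 99 + [25]
peak_meeting = [22] * 95 + [180] * 5

quiet_report = check_latency(quiet_hour, p99_budget_ms=150)
peak_report = check_latency(peak_meeting, p99_budget_ms=150)
print(quiet_report)
print(peak_report)
```

In this made-up data the peak run averages about 30 ms, which looks healthy, while its p99 of 180 ms is the kind of stutter a company-wide meeting would expose.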

The Operational Blind Spots

Documentation Drift

In the rush to get systems operational, documentation often takes a backseat. But those hastily written “temporary” configurations and undocumented workarounds have a way of becoming permanent, until something breaks and no one remembers how it was supposed to work.

The reality: Six months later, when you need to make changes or troubleshoot issues, you’re left playing detective with your own infrastructure. Critical knowledge might exist only in someone’s head, creating dangerous single points of failure.

Prevention strategy: Make documentation updates mandatory on your migration checklist. Assign specific team members to document changes as they happen, not after the fact. Create standardized templates for configurations, procedures, and troubleshooting guides.

Redundancy Assumptions

Just because your new facility has redundant power, cooling, and network connections doesn’t mean your specific setup is taking advantage of them. Many businesses discover too late that their equipment isn’t properly distributed across redundant systems, or that their failover procedures don’t work as expected in the new environment.

The reality: A single point of failure that you thought you’d eliminated might still exist, just in a different form. When that inevitable outage happens, you might find that your “redundant” systems aren’t as independent as you believed.

Prevention strategy: Physically verify redundant pathways and test failover procedures under controlled conditions. Map out every connection and identify potential single points of failure. Don’t assume that because redundancy exists in the facility, your specific configuration is protected.
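Once connections are mapped, finding single points of failure becomes a graph question: which device, if it failed alone, would cut a server off from its uplink? The sketch below answers it by brute force, removing each intermediate node in turn and re-running a breadth-first search. That approach is easy to verify and fast enough for a rack-scale map. The device names and topology are hypothetical, and the same approach works for power paths if you model PDUs, UPS feeds, and circuits as nodes. It can only find what is on the map, though. Two “diverse” circuits that share a conduit will look independent unless you record that shared dependency, which is why physical verification still matters.

```python
from collections import deque

def build_graph(links):
    """Undirected adjacency map built from (a, b) link pairs."""
    graph = {}
    for a, b in links:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph

def reachable(graph, start, goal, removed=None):
    """Breadth-first search from start to goal, treating `removed` as failed."""
    if removed in (start, goal):
        return False
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for neighbor in graph.get(node, ()):
            if neighbor != removed and neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return False

def single_points_of_failure(graph, start, goal):
    """Intermediate nodes whose individual failure cuts start off from goal."""
    if not reachable(graph, start, goal):
        raise ValueError(f"{start} cannot reach {goal} even with everything up")
    return sorted(
        node for node in graph
        if node not in (start, goal)
        and not reachable(graph, start, goal, removed=node)
    )

# Hypothetical topology: a dual-homed server whose two top-of-rack switches
# both uplink through the same core router.
links = [
    ("server-01", "tor-a"), ("server-01", "tor-b"),
    ("tor-a", "core-1"), ("tor-b", "core-1"),
    ("core-1", "carrier-uplink"),
]
print(single_points_of_failure(build_graph(links), "server-01", "carrier-uplink"))
# ['core-1']
```

The dual switches look redundant, but the shared core router is still a single point of failure. Adding a second core router with its own links to both switches and the uplink makes the result an empty list.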

The Financial Surprises

Hidden Costs and Billing Complexities

Data center billing can be complex, with charges that don’t always align with how you think about your usage. Cross-connects, remote hands services, power charges adjusted for power usage effectiveness (PUE), and bandwidth overages can add up in unexpected ways.

The reality: Your monthly bill might include charges you didn’t anticipate, or billing methods that differ from your previous facility. What seems like a cost-effective move can turn out to be more expensive once you factor in all the ancillary charges.

Prevention strategy: Request detailed billing examples before signing contracts, including scenarios that match your expected usage patterns. Understand how every service is metered and billed. Build a buffer into your budget for unexpected charges during the first few months.
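A simple worksheet makes the provider’s billing examples easier to compare against your own usage. This sketch assumes metered power multiplied by a power usage effectiveness (PUE) factor to cover facility overhead, plus bandwidth billed on a 95th-percentile basis above a commit. Both are metering methods some providers use, but your contract may bill power per committed kW or per circuit instead. Every rate, quantity, and the 15% buffer below are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class UsageScenario:
    # All fields are hypothetical inputs; take real figures from the
    # provider's own billing examples and your contract.
    it_load_kw: float               # average metered IT draw
    pue_multiplier: float           # use 1.0 if overhead is not billed this way
    price_per_kwh: float
    cross_connects: int
    cross_connect_fee: float        # monthly recurring charge per cross-connect
    remote_hands_hours: float
    remote_hands_rate: float        # per hour
    bandwidth_commit_mbps: float
    bandwidth_p95_mbps: float       # the month's 95th-percentile usage
    overage_per_mbps: float
    hours_in_month: float = 730.0   # 8,760 hours per year / 12

def estimate_monthly_bill(s: UsageScenario, buffer_pct: float = 15.0) -> dict:
    """Break a month's charges into line items and add a contingency buffer."""
    items = {
        "power": s.it_load_kw * s.hours_in_month * s.pue_multiplier * s.price_per_kwh,
        "cross_connects": s.cross_connects * s.cross_connect_fee,
        "remote_hands": s.remote_hands_hours * s.remote_hands_rate,
        "bandwidth_overage": max(0.0, s.bandwidth_p95_mbps - s.bandwidth_commit_mbps)
                             * s.overage_per_mbps,
    }
    subtotal = sum(items.values())
    result = {name: round(cost, 2) for name, cost in items.items()}
    result["subtotal"] = round(subtotal, 2)
    result["budget_with_buffer"] = round(subtotal * (1 + buffer_pct / 100), 2)
    return result

scenario = UsageScenario(
    it_load_kw=10.0, pue_multiplier=1.5, price_per_kwh=0.12,
    cross_connects=4, cross_connect_fee=300.0,
    remote_hands_hours=3.0, remote_hands_rate=150.0,
    bandwidth_commit_mbps=1000.0, bandwidth_p95_mbps=1200.0, overage_per_mbps=2.0,
)
bill = estimate_monthly_bill(scenario)
for item, cost in bill.items():
    print(f"{item:>20}: {cost:>10,.2f}")
```

With these placeholder figures, power is less than 40% of the subtotal. The cross-connects, remote hands, and bandwidth overage make up the rest, which is exactly the kind of ancillary spend that catches budgets off guard.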

SLA Misalignment

Service Level Agreements often look great on paper but can create conflicts when reality doesn’t match expectations. The SLA might guarantee 99.99% uptime, but your definition of “downtime” may differ from the provider’s, and its response time commitments might not match your actual operational needs.

The reality: When issues arise, you might find that the SLA doesn’t provide the protection or recourse you expected. What seemed like comprehensive coverage might have gaps that leave you exposed during critical situations.

Prevention strategy: Review SLAs with your operations team to ensure they align with your actual business requirements. Understand exactly what is and isn’t covered, and how remedies are calculated. Consider negotiating custom SLA terms that better match your specific needs.
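Working through the numbers makes both the definitional gap and the remedy calculation concrete. Over a 30-day month, 99.99% uptime allows 0.01% of 43,200 minutes, or about 4.3 minutes of downtime. The sketch below assumes a hypothetical contract that excludes scheduled maintenance from downtime and pays tiered credits as a percentage of the monthly fee. The tiers, fee, and outage log are invented for illustration, so substitute the terms from your own SLA.

```python
MINUTES_PER_DAY = 24 * 60

# Hypothetical credit schedule: (uptime below this %, credit as % of monthly fee).
CREDIT_TIERS = [(99.99, 10.0), (99.9, 25.0), (99.0, 50.0)]

def allowed_downtime_minutes(uptime_pct, period_days=30):
    """Downtime budget implied by an uptime percentage over one billing period."""
    return (1 - uptime_pct / 100) * period_days * MINUTES_PER_DAY

def counted_downtime(outages, include_scheduled):
    """Total outage minutes, optionally excluding scheduled-maintenance events."""
    return sum(o["minutes"] for o in outages if include_scheduled or not o["scheduled"])

def achieved_uptime_pct(downtime_minutes, period_days=30):
    """Uptime percentage for a billing period with the given downtime."""
    return 100 * (1 - downtime_minutes / (period_days * MINUTES_PER_DAY))

def service_credit(monthly_fee, uptime_pct, tiers=CREDIT_TIERS):
    """Apply the most severe tier whose threshold the achieved uptime falls below."""
    for threshold, credit_pct in sorted(tiers):  # lowest threshold (worst tier) first
        if uptime_pct < threshold:
            return monthly_fee * credit_pct / 100
    return 0.0

# Hypothetical month: a 3-minute unplanned outage, plus a 20-minute maintenance
# window that took your service down but that the provider classifies as scheduled.
outages = [{"minutes": 3, "scheduled": False}, {"minutes": 20, "scheduled": True}]

budget = allowed_downtime_minutes(99.99)                            # about 4.32 minutes
your_view = counted_downtime(outages, include_scheduled=True)       # 23
provider_view = counted_downtime(outages, include_scheduled=False)  # 3

your_credit = service_credit(5000.0, achieved_uptime_pct(your_view))          # 500.0
provider_credit = service_credit(5000.0, achieved_uptime_pct(provider_view))  # 0.0
print(f"budget {budget:.2f} min; you count {your_view}, provider counts {provider_view}")
```

Same month, two definitions: you see a breach worth a credit, the provider sees none. Even when a credit does apply, under this made-up schedule it is tied to the monthly fee, not to what the outage cost your business.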

Building Your Post-Migration Success Plan

Establish a Monitoring Baseline

Implement comprehensive monitoring from day one in your new facility. Don’t wait for problems to surface; proactively track performance, resource usage, and system health. Compare metrics against your pre-migration baselines to spot worrying trends before they turn into outages.

Set up alerting thresholds that account for the new environment. What was normal in your old facility might not be normal in the new one, so adjust your monitoring accordingly.
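One way to set thresholds for the new environment is to derive them from readings taken there during a healthy burn-in period, rather than carrying old values over. The sketch below uses a common simple heuristic, the mean plus three sample standard deviations, capped at a hard ceiling such as a contracted per-rack power limit. The readings, the old 6.5 kW threshold, and the 7.5 kW ceiling are hypothetical.

```python
import statistics

def derive_threshold(samples, k=3.0, ceiling=None):
    """Alert threshold = mean + k * sample standard deviation of readings taken
    while the system was known to be healthy, optionally capped at a hard limit."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to estimate spread")
    threshold = statistics.mean(samples) + k * statistics.stdev(samples)
    return threshold if ceiling is None else min(threshold, ceiling)

# Hypothetical per-rack power readings (kW) from the first weeks in the new facility.
new_readings = [6.4, 6.6, 6.8, 7.0, 7.2]
old_static_threshold = 6.5  # carried over from the old facility

noisy_alerts = sum(r > old_static_threshold for r in new_readings)  # 4 of 5 readings
recalibrated = derive_threshold(new_readings)                        # about 7.75 kW
capped = derive_threshold(new_readings, ceiling=7.5)                 # 7.5
print(noisy_alerts, round(recalibrated, 2), capped)
```

In this example the old threshold would fire on four of five normal readings. The heuristic assumes the burn-in window really was healthy; if the new facility started out with a genuine problem, this approach would bake that problem into your “normal.”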

Create Communication Channels

Establish clear communication protocols with your data center provider. Know who to contact for different types of issues, understand escalation procedures, and maintain regular check-ins during your first few months.

Internally, ensure that your team knows how to report and track post-migration issues. Create a feedback loop that captures problems early and documents solutions for future reference.

Plan for the Learning Curve

Accept that there will be a learning curve as your team adapts to the new environment. Build extra support capacity into your staffing plan for the first few months. Consider having key team members document lessons learned and best practices as they emerge.

Don’t rush to optimize everything immediately. Give yourself time to understand how your systems behave in the new environment before making significant changes.

Schedule Regular Health Checks

Plan formal reviews at 30, 60, and 90 days post-migration. Use these checkpoints to assess how well the migration is meeting your expectations, identify any emerging issues, and adjust your approach as needed.

These reviews should cover technical performance, budget alignment, operational efficiency, and team satisfaction. Use the insights to refine your processes and prevent similar issues in future migrations.

The Path Forward

Post-migration success isn’t about avoiding all surprises: it’s about being prepared to handle them effectively when they occur. By understanding common pitfalls and implementing proactive strategies, you can minimize disruptions and ensure that your migration delivers the benefits you expected.

Remember that migration is a process, not an event. Your work doesn’t end when the servers are powered up in the new facility. True success comes from the weeks and months of careful monitoring, adjustment, and optimization that follow.

The businesses that thrive after data center migrations are those that plan for the unexpected, maintain flexibility in their approach, and view post-migration challenges as opportunities to build more resilient and efficient operations.

Your new facility represents an investment in your company’s future. With proper preparation and realistic expectations, you can make sure that investment pays off as planned, without the costly surprises that catch less prepared organizations off guard.